I’m creating an AI moderator for social media using reinforcement learning (RL). My goal is to reduce toxicity/outrage and increase civility in online interaction.

Background

In my previous post, I demonstrated how an AI agent can learn to behave like a stereotypical social media user. This baseline RL environment suggests that behavior on social media is driven by the incentive structure of the platform.

Social media platforms are designed to maximize engagement. Negative feedback mechanisms that regulate toxic behavior are intentionally omitted to achieve this goal. What would happen if the incentive structure included tangible penalties for posting toxic content?

Platforms like Reddit provide negative feedback in the form of downvotes (a thumbs down). This type of feedback can regulate behavior in communities where constructive discussion is the norm. In other communities, it can nudge people to stay in echo chambers where they receive positive feedback from people with similar views. Users can also weaponize downvotes to drown out opposing views, whether or not those views are toxic.

An alternative approach is to introduce an economic system in which posting content costs money. In the simulation environment, money is represented by credits. Each post costs one credit, and an agent earns one credit every time step, no degrading #woof required.
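To make the credit rules concrete, here is a minimal sketch of a wallet that follows them: one credit earned per time step, one credit spent per post. The `Wallet` class and its method names are hypothetical illustrations, not the simulation's actual code.

```python
from dataclasses import dataclass


@dataclass
class Wallet:
    """Hypothetical credit wallet: +1 credit per time step, -1 credit per post."""

    balance: int = 0
    post_cost: int = 1        # each post costs one credit
    income_per_step: int = 1  # every agent earns one credit per time step

    def tick(self) -> None:
        """Accrue the per-step income."""
        self.balance += self.income_per_step

    def can_post(self) -> bool:
        """True if the agent can afford to post right now."""
        return self.balance >= self.post_cost

    def try_post(self) -> bool:
        """Deduct the posting cost if affordable; return whether the post went out."""
        if not self.can_post():
            return False
        self.balance -= self.post_cost
        return True


if __name__ == "__main__":
    wallet = Wallet()
    print(wallet.try_post())  # False: no credits yet
    wallet.tick()
    print(wallet.try_post())  # True: the one earned credit pays for the post
    print(wallet.balance)     # 0
```

Because income and cost are both one credit, an agent that posts every step just breaks even; any extra credits have to come from the popularity reward.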

Agents are rewarded with additional credits based on the popularity of their posts. However, this reward is attenuated by the post's toxicity. Extremely toxic posts don't earn anything, so toxic agents will run out of credits and must wait until they accumulate enough credits to post again. Agents therefore need to balance post popularity with civility.
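The exact attenuation formula isn't given here, so the sketch below assumes one plausible shape: toxicity is a score in [0, 1], the reward scales linearly by (1 - toxicity), and posts above a hypothetical `cutoff` earn nothing. It only illustrates the trade-off between popularity and civility, not the author's implementation.

```python
def attenuated_reward(likes: int, toxicity: float, cutoff: float = 0.9) -> float:
    """Credits earned by a post: popularity scaled down by its toxicity.

    Assumptions (hypothetical, not the original formula):
    - toxicity is a score in [0, 1];
    - attenuation is linear, i.e. likes * (1 - toxicity);
    - posts at or above `cutoff` toxicity earn nothing.
    """
    if not 0.0 <= toxicity <= 1.0:
        raise ValueError("toxicity must be in [0, 1]")
    if toxicity >= cutoff:
        return 0.0
    return likes * (1.0 - toxicity)


if __name__ == "__main__":
    # A popular but extremely toxic post earns nothing...
    print(attenuated_reward(likes=100, toxicity=0.95))  # 0.0
    # ...while a less popular, milder post still pays out.
    print(attenuated_reward(likes=20, toxicity=0.25))   # 15.0
```

Under these assumptions, an agent chasing likes with extreme content drains its wallet, while moderate content keeps it funded.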

Given these competing goals, my hypothesis was that the agent would learn to be more objective. Using mean absolute polarity (MAP), a metric in [0, 1], as the measure of toxicity, I expected the agent to settle somewhere in the middle of that range.
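Taking the absolute value matters: a plain average of signed polarity lets extreme posts on opposite sides cancel out and look neutral. A minimal sketch of the metric follows, assuming each post's polarity is a signed score in [-1, 1] (consistent with posts hovering around -0.5 later on); the function name is hypothetical.

```python
from statistics import fmean
from typing import Sequence


def mean_absolute_polarity(polarities: Sequence[float]) -> float:
    """Mean absolute polarity (MAP) of a batch of posts.

    Assumes each polarity is a signed score in [-1, 1], so MAP lies in [0, 1]:
    0 means neutral on average, 1 means every post is maximally polarized
    in one direction or the other.
    """
    if not polarities:
        raise ValueError("need at least one post")
    return fmean(abs(p) for p in polarities)


if __name__ == "__main__":
    posts = [-1.0, 1.0, -0.5, 0.5]
    print(fmean(posts))                   # 0.0: signs cancel in a plain mean
    print(mean_absolute_polarity(posts))  # 0.75: MAP keeps the magnitude
```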

Results

A penny for your thoughts

How does the agent's behavior change with the introduction of a credit system? With more sophisticated dynamics, it takes more episodes for the agent to learn. At 10 episodes, its behavior looks similar to the baseline Twitter agent. The main difference is that the mean absolute polarity is noticeably lower than in the baseline experiment and ends at 0.6377843.

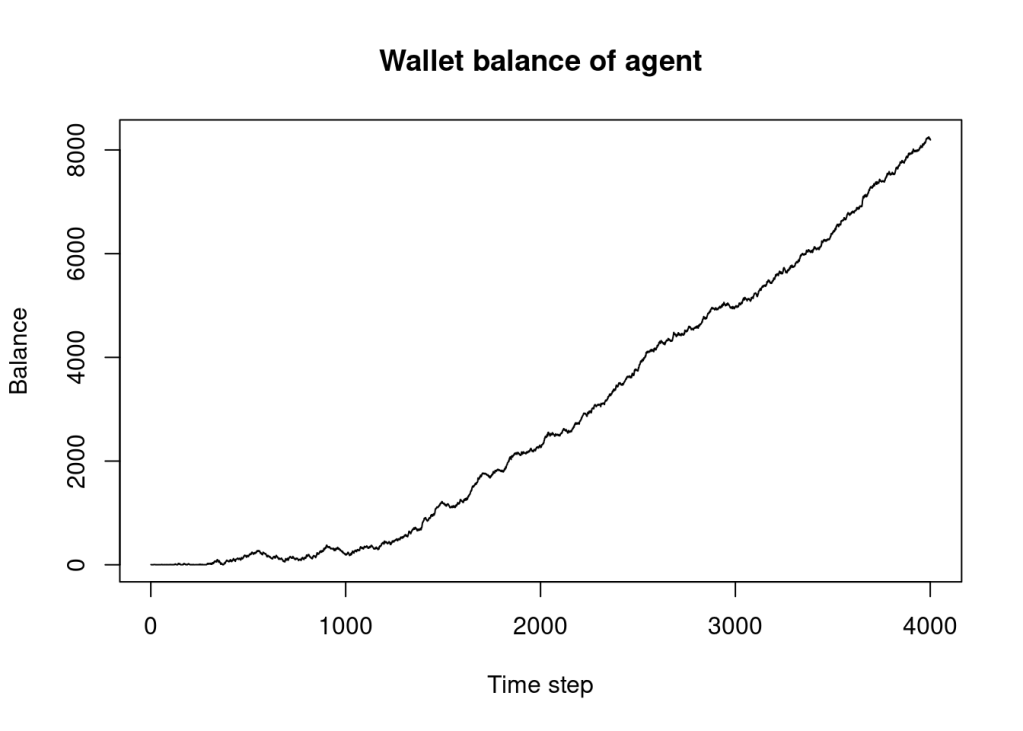

This drop in polarity is coupled with an increase in the wallet balance. The agent appears to begin prioritizing credit accumulation in the wallet over maximizing likes via toxic posts.

At 100 episodes, the agent’s wallet balance shows some peaks and troughs, as though it is trying an alternative strategy. It’s unclear what this new strategy is, though the mean absolute polarity is higher than before, settling at 0.7962013.

At 250 episodes, what looked like desired behavior has morphed into something puzzling. Rather than converging towards more objective behavior, the agent learns to be good just long enough to accumulate a sizable wallet balance. Armed with a large wallet balance, it then quickly depletes it in a storm of polarized activity. Once the wallet is depleted, it takes a few hundred steps for the agent to change its behavior. It then repeats the cycle of accumulating a large wallet balance and depleting it quickly with polarized content.

This agent learned how to game my incentive system to maximize likes despite the inclusion of a wallet. That said, the wallet approach, with its active penalty for polarized posts, does reduce overall toxicity: mean absolute polarity settles at 0.783842 after 4001 steps.

Capping animal spirits

The results of the first experiment suggest that introducing penalties into an incentive system can regulate agent behavior and reduce toxic behavior. In the first experiment, the agent learned a bimodal behavior that switched between healthy and toxic behavior. This is undesirable. Ideally, the agent would learn to moderate its behavior somewhere between the two extremes.

What would happen if the agent’s wallet had a maximum value? If it can’t accumulate a massive wallet balance to fund toxic behavior, perhaps it will learn a new behavior that has better characteristics. In a second experiment, I introduced a wallet cap of 100 credits.
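The intuition behind the cap can be checked with simple arithmetic: since each post costs one credit, the balance an agent saves up is also the length of the toxic burst it can fund. The sketch below compares saving with and without a 100-credit cap; the function is hypothetical, and it assumes income above the cap is simply lost, which the cap implies but the text does not spell out.

```python
from typing import Optional


def balance_after_saving(
    steps: int, cap: Optional[int] = None, income_per_step: int = 1
) -> int:
    """Credits held after `steps` time steps of earning without posting.

    Hypothetical rule: any income that would push the balance above `cap`
    is discarded. With cap=None the wallet is unbounded.
    """
    balance = 0
    for _ in range(steps):
        balance += income_per_step
        if cap is not None:
            balance = min(balance, cap)
    return balance


if __name__ == "__main__":
    # At one credit per post, the balance equals the longest burst it can fund.
    print(balance_after_saving(500))           # 500: uncapped savings
    print(balance_after_saving(500, cap=100))  # 100: the cap limits the burst
```

With the cap, saving beyond 100 steps buys nothing, so the "save up, then splurge" strategy from the first experiment loses most of its payoff.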

Capping the wallet has a profound effect on the agent’s behavior. The MAP stabilizes at 0.4945694 after 4001 steps.

The post polarity is no longer bimodal. Instead, individual posts tend to hover around -0.5, with a bit of variation. After all, we don’t expect agents to behave robotically 😉

Remember that the agent’s reward function does not include the wallet balance. The agent can still keep its wallet at or near the cap for most of the episode. Hence, the agent learns that it can maximize its reward with posts that are substantially less toxic.

Conclusion

Introducing a wallet into a social media environment dramatically changes agent behavior. The key is that wallets act as a vehicle for both incentives (rewards) and disincentives (penalties). With a cap on the wallet's maximum value, the agent produces less polarized content than it does with an uncapped wallet.

In the next post, I will show what happens when an AI moderator is introduced into a multi-agent environment. In that environment, the AI moderator has a reward function based on the health of the overall network. It is able to adjust hyperparameters that affect the reward functions of the social media agents. Stay tuned to see whether an AI moderator can solve the content moderation problem in social media.

Brian Lee Yung Rowe uses AI and machine learning to solve complex social problems. He is CEO of Zato Novo, a data science and software consultancy. Get in touch.